Ollama on Windows with Intel A770 WSL

My main PC is an AMD Ryzen 9 7900X with an Intel Arc A770, running Windows 11. With all the hype around AI, I wanted to play around with some models locally without dual booting. Here are the steps I used to get Ollama and Open WebUI working.

Install WSL (from the Microsoft Store, or search for instructions), along with Docker Desktop or Rancher Desktop.

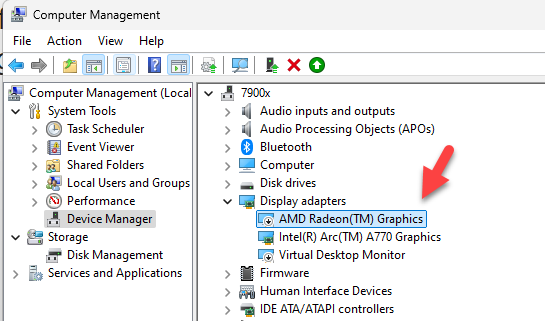

Disable the AMD Radeon Graphics device (the integrated GPU on the Ryzen). If you don't, the WSL Ubuntu instance will not recognize the A770.

Install the required packages and drivers inside WSL:

WSL> sudo apt-get install intel-opencl-icd intel-level-zero-gpu level-zero intel-media-va-driver-non-free libmfx1 libmfxgen1 libvpl2 libegl-mesa0 libegl1-mesa libegl1-mesa-dev libgbm1 libgl1-mesa-dev libgl1-mesa-dri libglapi-mesa libgles2-mesa-dev libglx-mesa0 libigdgmm11 libxatracker2 mesa-va-drivers mesa-vdpau-drivers mesa-vulkan-drivers va-driver-all

Restart WSL

PS C:\Users\james> wsl --shutdown

Check that WSL can see the video card

PS C:\Users\james> wsl

majerus@7900x:/mnt/c/Users/james$ glxinfo | grep "OpenGL renderer string"

OpenGL renderer string: D3D12 (Intel(R) Arc(TM) A770 Graphics)
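If you want to script this check instead of reading the output by eye, here is a minimal sketch. The function name `renderer_is_arc` is made up for illustration, and it only looks for the word "Arc" on the renderer line, so treat it as a rough sanity check rather than a real driver test.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads glxinfo-style text on stdin and succeeds only
# when the "OpenGL renderer string" line mentions an Arc GPU.
renderer_is_arc() {
    grep "OpenGL renderer string" | grep -q "Arc"
}
```

Inside WSL it could be used as `glxinfo | renderer_is_arc && echo "A770 visible" || echo "A770 not visible"`.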

Now deploy the container

docker run -itd --net=bridge --device=/dev/dri --privileged -p 11434:11434 -v E:\AI_Models:/root/.ollama/models -v /usr/lib/wsl:/usr/lib/wsl -e ZES_ENABLE_SYSMAN=1 -e no_proxy=localhost,127.0.0.1 --memory="32G" -e DEVICE=Arc --shm-size="16g" -e OLLAMA_HOST=0.0.0.0 --name=ipex-llm intelanalytics/ipex-llm-inference-cpp-xpu:latest bash -c "cd /llm/scripts && source ipex-llm-init --gpu --device `$DEVICE && bash start-ollama.sh && tail -f /llm/ollama/ollama.log"

Note the backtick before $DEVICE: it stops PowerShell from expanding the variable itself, which would break the command, so that $DEVICE is resolved inside the container instead. For the models path, use whatever makes sense on your system.
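The one-liner above is written for PowerShell. If you would rather launch from the WSL shell, the sketch below lays out the same flags for bash. I have not verified this variant: `MODELS_DIR`, `CONTAINER_CMD`, and `ipex_ollama_args` are names invented here, and `/mnt/e/AI_Models` is only an assumed WSL-side path for `E:\AI_Models`. In bash the backtick escape is not needed; single quotes do the same job.

```shell
#!/usr/bin/env bash
# Sketch: the flags from the PowerShell one-liner, laid out for bash in WSL.
# MODELS_DIR is an assumed WSL-side path for E:\AI_Models; adjust as needed.
MODELS_DIR="${MODELS_DIR:-/mnt/e/AI_Models}"

# Command run inside the container. Single quotes keep $DEVICE literal here,
# so it is expanded by the container's bash (where DEVICE=Arc is set).
CONTAINER_CMD='cd /llm/scripts && source ipex-llm-init --gpu --device $DEVICE && bash start-ollama.sh && tail -f /llm/ollama/ollama.log'

# Prints the docker arguments one per line so they can be reviewed first.
ipex_ollama_args() {
    printf '%s\n' \
        run -itd --net=bridge --device=/dev/dri --privileged \
        -p 11434:11434 \
        -v "${MODELS_DIR}:/root/.ollama/models" \
        -v /usr/lib/wsl:/usr/lib/wsl \
        -e ZES_ENABLE_SYSMAN=1 \
        -e no_proxy=localhost,127.0.0.1 \
        -e DEVICE=Arc \
        -e OLLAMA_HOST=0.0.0.0 \
        --memory=32G --shm-size=16g \
        --name=ipex-llm \
        intelanalytics/ipex-llm-inference-cpp-xpu:latest \
        bash -c "$CONTAINER_CMD"
}
```

To actually start the container from bash, something like `mapfile -t args < <(ipex_ollama_args) && docker "${args[@]}"` should work. Printing the arguments first makes it easy to confirm that nothing unexpected is being passed.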

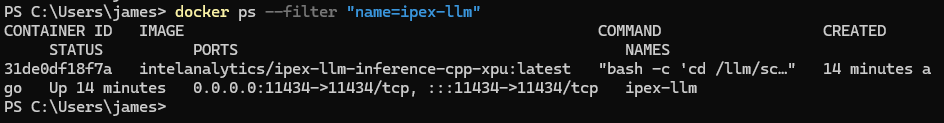

Verify the container is running

docker ps --filter "name=ipex-llm"
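`docker ps` only tells you the container exists, not that Ollama inside it is answering. A rough way to check that, assuming the 11434:11434 port mapping from the run command and that `curl` is installed, is to hit Ollama's `/api/tags` endpoint, which lists the local models. The function name `ollama_is_up` is made up for this sketch.

```shell
#!/usr/bin/env bash
# Sketch: succeeds if an Ollama server answers at the given base URL.
# The default URL assumes the 11434:11434 port mapping used for the container.
ollama_is_up() {
    base_url="${1:-http://localhost:11434}"
    # -f turns HTTP errors into a non-zero exit; --max-time avoids hanging.
    curl -fsS --max-time 5 "${base_url}/api/tags" >/dev/null 2>&1
}
```

For example, `ollama_is_up && echo "Ollama is answering" || docker logs ipex-llm` prints the container log when the API is not reachable yet.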

Start the Open WebUI container

docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=<MACHINEIPADDRESS>:11434 -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

<MACHINEIPADDRESS> should be the IP address of the machine you're running Ollama on, e.g. 192.168.1.42.
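One thing to watch: Open WebUI's own README examples write OLLAMA_BASE_URL as a full URL including the scheme. If the bare address form does not connect for you, it may be worth trying the `http://` form. The helper below is only a sketch of how that value could be built; `ollama_base_url` is a made-up name, and the `http://` prefix is my assumption based on those README examples.

```shell
#!/usr/bin/env bash
# Sketch: builds an OLLAMA_BASE_URL value from a host and an optional port.
# The http:// scheme is an assumption based on Open WebUI's README examples.
ollama_base_url() {
    host="$1"
    port="${2:-11434}"
    printf 'http://%s:%s\n' "$host" "$port"
}
```

The result can then be passed to the container as `-e OLLAMA_BASE_URL="$(ollama_base_url 192.168.1.42)"`.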

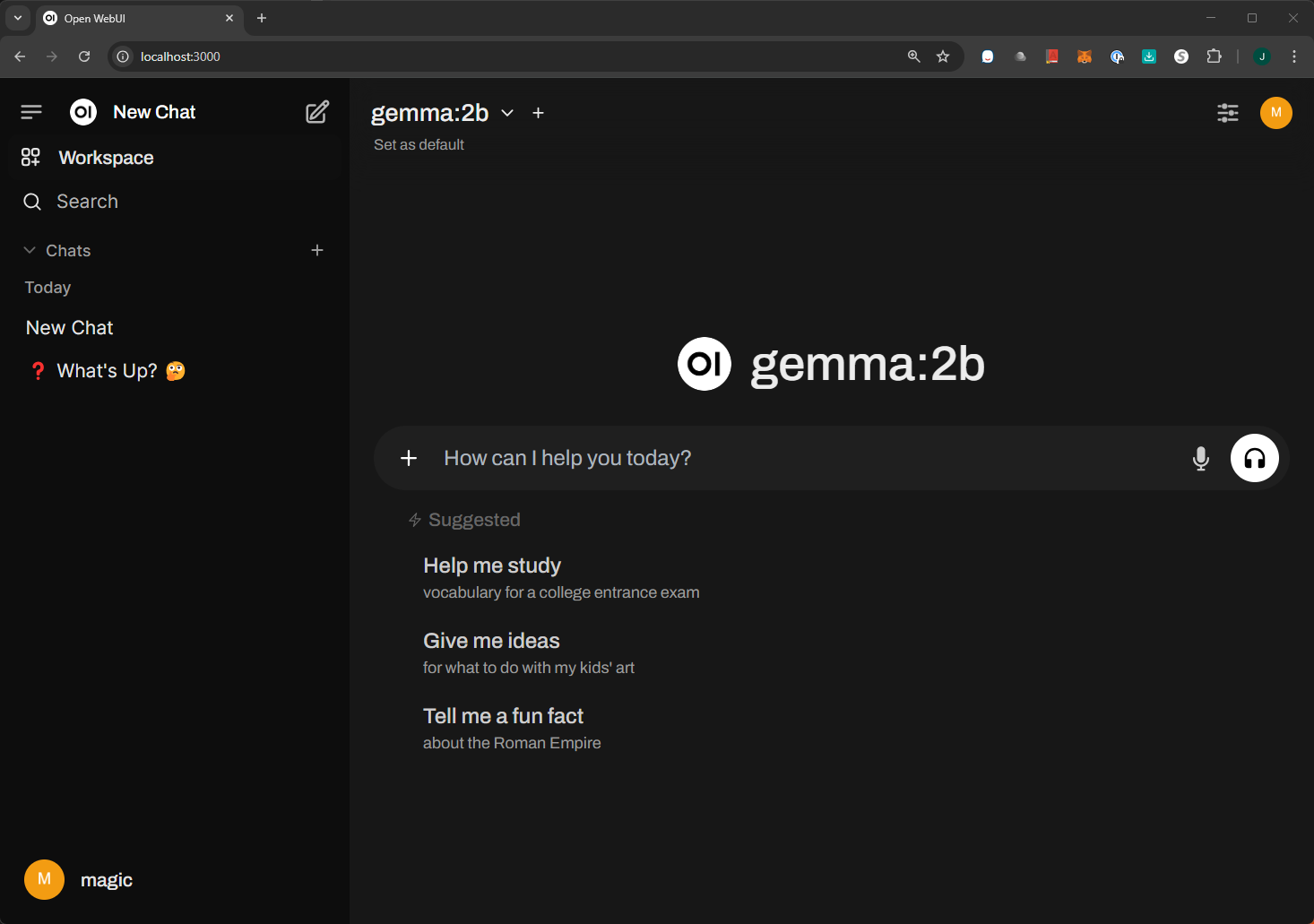

Open the WebUI in a browser (port 3000 on the host, per the -p 3000:8080 mapping) and start playing with models.

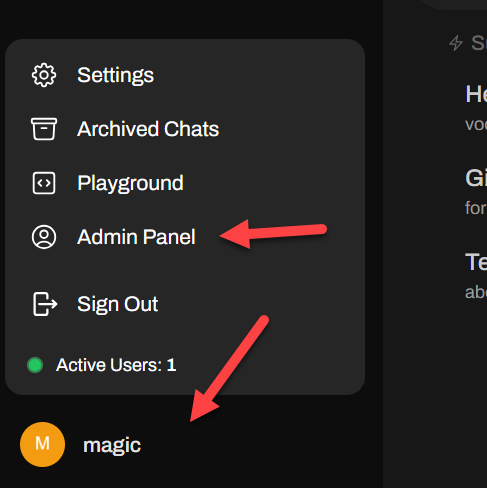

You will need to create an account; the UI will prompt you for the details. Once you do that, you should see something similar to this:

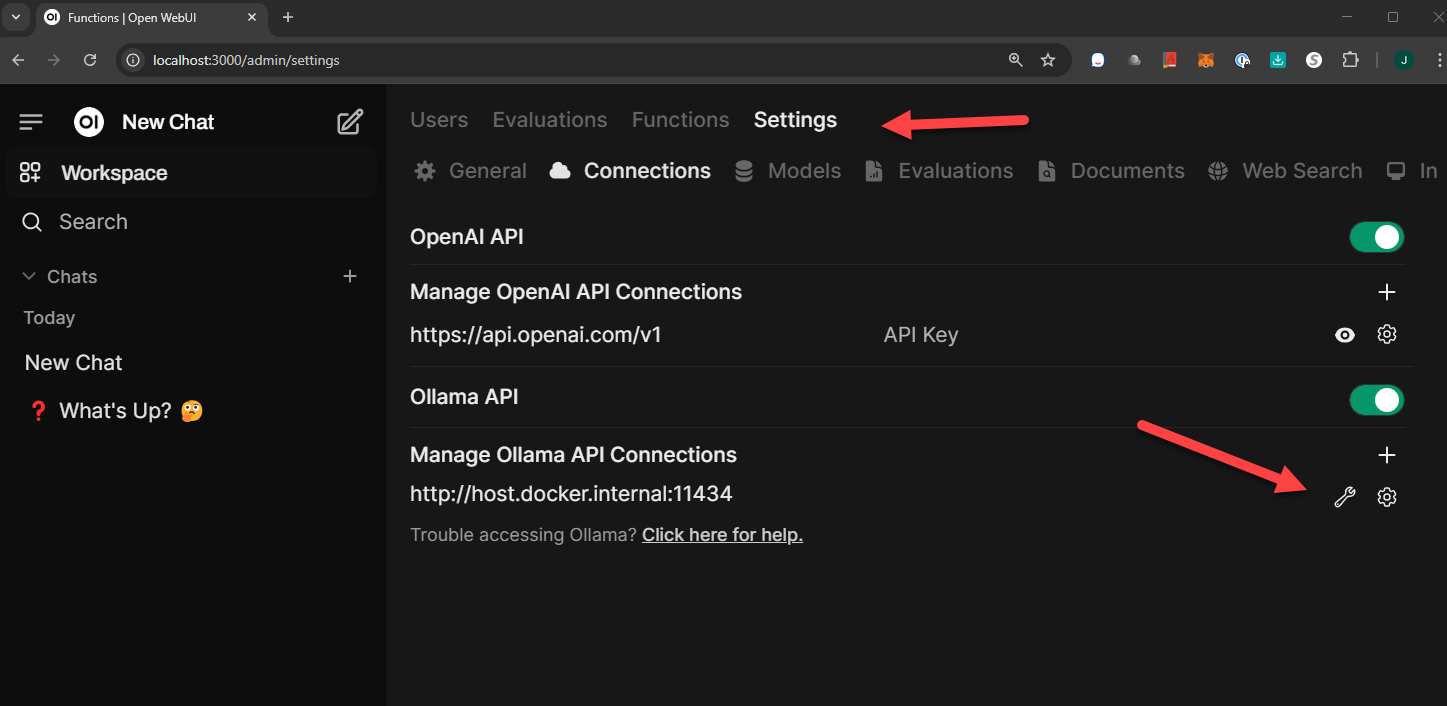

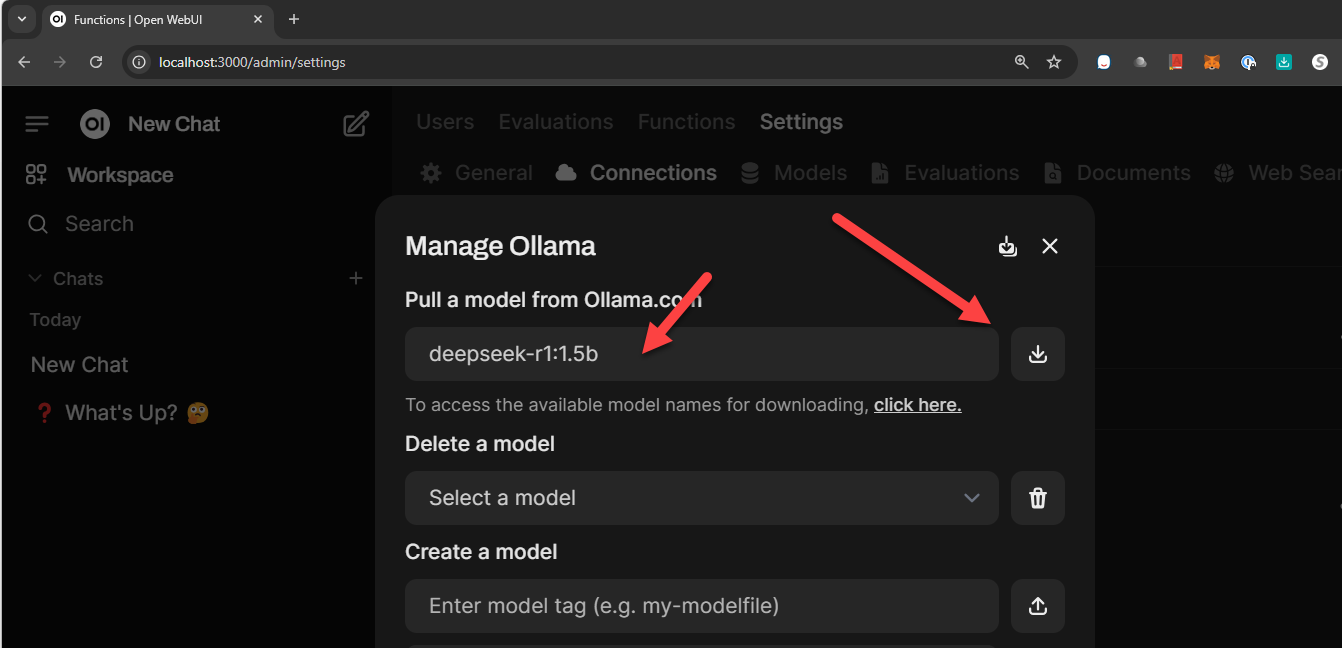

Let's add a model

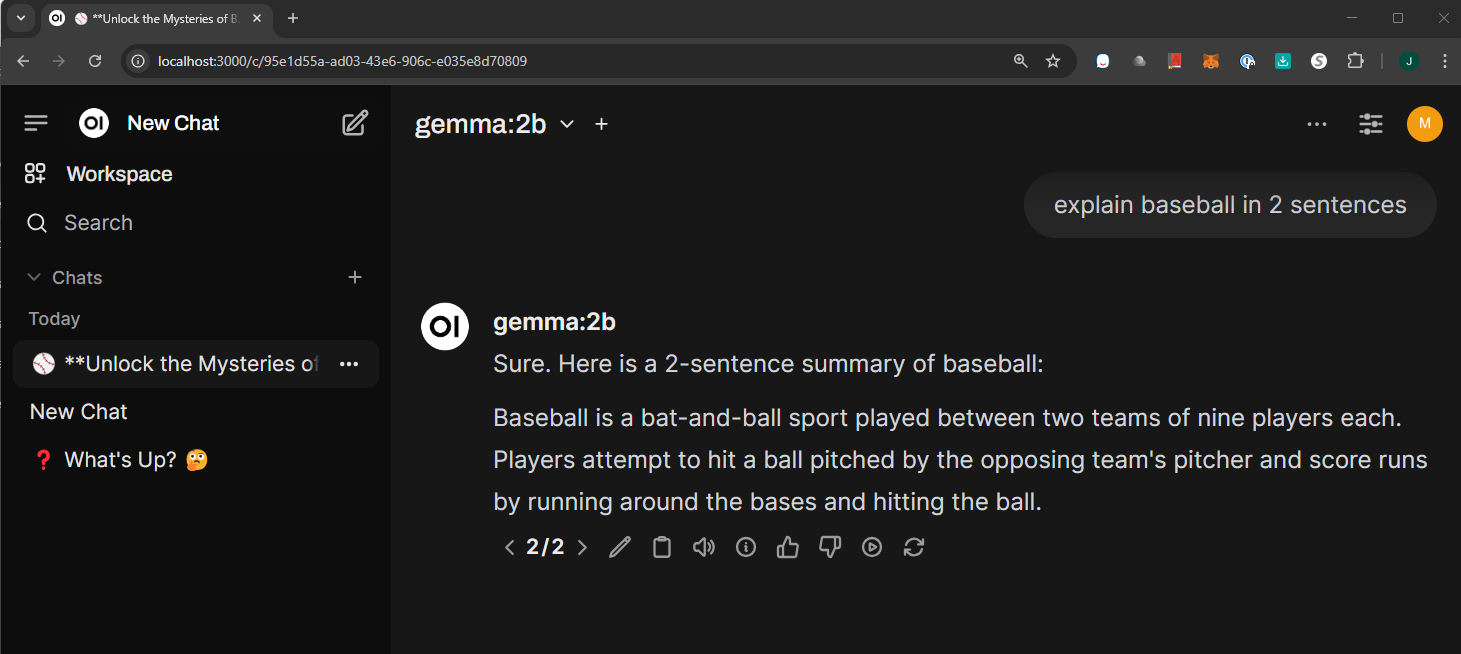

Now it's time to use the model

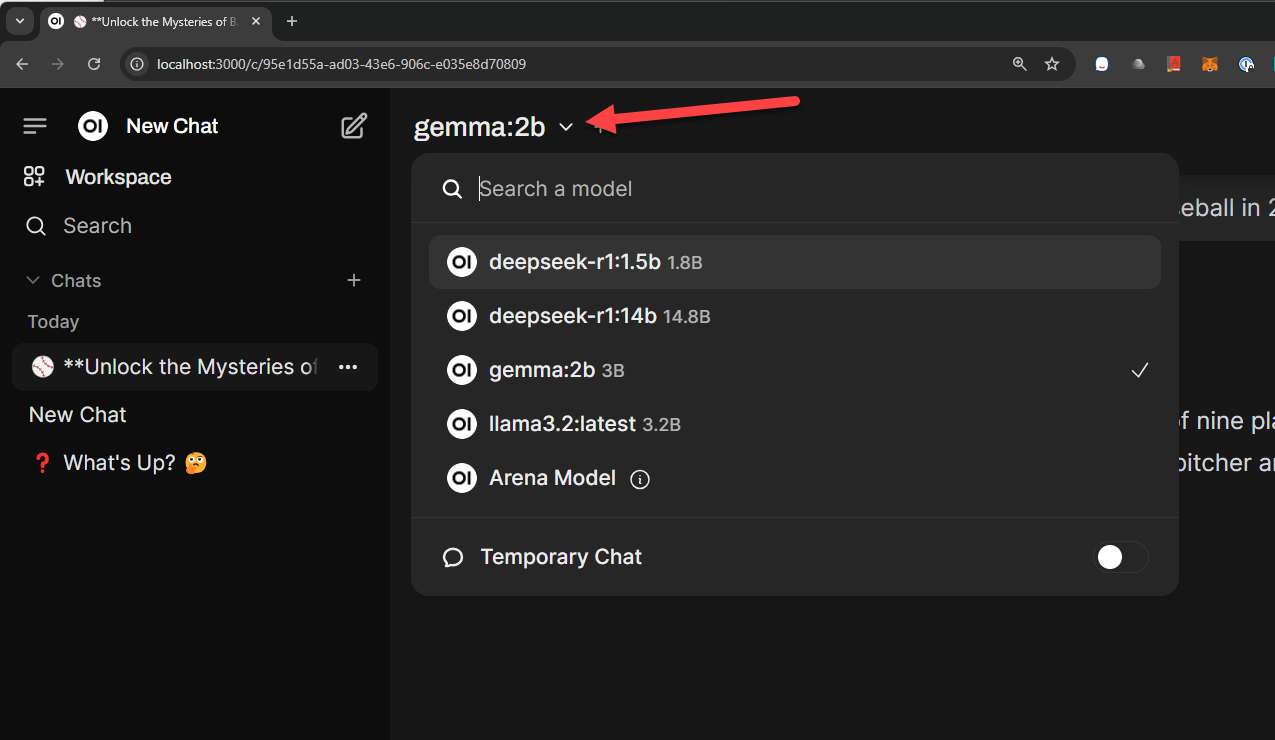

You can pick which model you want from the dropdown.

Overall, this was a huge mess to get working over the weekend. I worked through a bunch of links and other resources to get it to this point. One key detail: passing -e OLLAMA_INTEL_GPU=true will cause this to crash. Do not enable this setting.
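If you copied a run command from somewhere else and are not sure whether that setting sneaked in, a quick check is possible. This is just a sketch: `has_intel_gpu_flag` is a made-up helper that reads KEY=VALUE lines on stdin, and it only catches the exact value `true`.

```shell
#!/usr/bin/env bash
# Sketch: reads KEY=VALUE lines on stdin and succeeds if the setting that
# caused the crash in this setup (OLLAMA_INTEL_GPU=true) is present.
has_intel_gpu_flag() {
    grep -q '^OLLAMA_INTEL_GPU=true$'
}
```

To check the running container, feed it the container's environment: `docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' ipex-llm | has_intel_gpu_flag && echo "remove OLLAMA_INTEL_GPU"`.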

Links:

- Github

- Blogs

- Cover Photo

- Photo by Neeqolah Creative Works / Unsplash